With Apple's recent announcement of its Child Abuse Prevention Kit, a new debate has emerged in the technology world.

WhatsApp CEO Will Cathcart said Apple's software was a clear violation of privacy that presented the world with something alarming, adding that WhatsApp would not adopt this system.

Beyond this debate, however, the World Health Organization reported in 2020 that close to 1 billion children aged 2 to 17 were exposed to physical, sexual or emotional violence in 2019. According to World Vision's data, 1.7 billion cases of child abuse are reported.

These figures show how visibly child abuse has increased. So what does Apple's Child Sexual Abuse Prevention Kit, aimed at CSAM (child sexual abuse material), offer users?

With the Child Sexual Abuse Prevention Kit, Apple aims to create technology that helps protect and empower children and enrich their lives, while helping keep them safe from those who use communication tools to recruit and exploit them.

Developed in three areas, the kit aims to help parents play a role in their children's online activities and to raise their awareness.

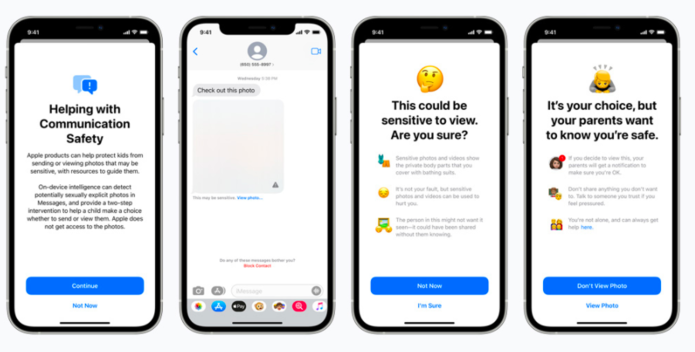

The Messages app will use on-device machine learning to alert about sensitive content while keeping private communications unreadable by Apple.

When sexually explicit content arrives on a child's phone in the Messages app, the photo will be blurred, the child will be warned and presented with helpful resources, and reassured that it is okay not to view the photo. As an added precaution, the child can also be told that if they do view it, their parents will receive a message. Similar protections apply if a child tries to send sexually explicit photos: the child will be warned before the photo is sent, and if the child chooses to send it, their parents will receive a message.
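The Messages behaviour described above can be summarised as a small decision flow. The Python below is a minimal sketch under stated assumptions, not Apple's implementation: the numeric explicitness score stands in for the output of the on-device classifier, and `EXPLICIT_THRESHOLD`, `ChildAccount`, `Outcome`, `receive_photo` and `send_photo` are all hypothetical names.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical cutoff: the real classifier and its threshold are not public.
EXPLICIT_THRESHOLD = 0.9


@dataclass
class ChildAccount:
    """Hypothetical child account; parent alerts are collected in a list."""
    name: str
    parent_notifications: List[str] = field(default_factory=list)


@dataclass
class Outcome:
    blurred: bool    # incoming photo stays blurred until the child chooses to view it
    warned: bool     # child saw a warning and helpful resources
    completed: bool  # photo was actually viewed (incoming) or sent (outgoing)


def receive_photo(child: ChildAccount, explicit_score: float, wants_to_view: bool) -> Outcome:
    """Incoming photo: blur and warn if flagged; notify parents only if the child views it."""
    if explicit_score < EXPLICIT_THRESHOLD:
        return Outcome(blurred=False, warned=False, completed=True)
    if wants_to_view:
        child.parent_notifications.append(f"{child.name} viewed a flagged photo")
    # Declining to view is fine: the photo simply stays blurred and nobody is notified.
    return Outcome(blurred=True, warned=True, completed=wants_to_view)


def send_photo(child: ChildAccount, explicit_score: float, wants_to_send: bool) -> Outcome:
    """Outgoing photo: warn before sending; notify parents if the child sends anyway."""
    if explicit_score < EXPLICIT_THRESHOLD:
        return Outcome(blurred=False, warned=False, completed=True)
    if wants_to_send:
        child.parent_notifications.append(f"{child.name} sent a flagged photo")
    return Outcome(blurred=False, warned=True, completed=wants_to_send)
```

For example, calling `receive_photo(kid, 0.95, wants_to_view=False)` returns a blurred, warned outcome and leaves `kid.parent_notifications` empty, matching the idea that choosing not to look is never a problem.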

In iOS and iPadOS, the Child Sexual Abuse Prevention Kit will use new applications of cryptography to help limit the online spread of CSAM while protecting user privacy. CSAM detection will help Apple provide law enforcement with valuable information about collections of CSAM in iCloud Photos.

Finally, updates to Siri and Search will give parents and children expanded information and help when they encounter unsafe situations. Siri and Search will also intervene when users try to search for CSAM-related topics.

With "neuralMatch", when Apple detects child pornography and similar material on iPhone users' devices, the user's account will be disabled and reported directly to the National Center for Missing and Exploited Children (NCMEC).

The feature will launch first in the USA.

The system compares photos against a database of known child sexual abuse images compiled by the US National Center for Missing and Exploited Children (NCMEC) and other child safety organizations.

The images in these databases are converted into numeric codes (hashes) and stored, so that they can be matched against images on an Apple device.
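The matching idea described above can be illustrated with a simple perceptual hash. The Python below is a minimal sketch, assuming an 8x8 grayscale image given as a list of pixel rows. It uses the well-known "average hash" technique as a stand-in: Apple's actual system uses its own neural-network-based hash and cryptographic matching, which this does not reproduce. The names `average_hash`, `hamming` and `matches_known`, and the `max_distance` tolerance, are hypothetical.

```python
from typing import Iterable, Sequence

# Hypothetical input format: an 8x8 grid of grayscale values (0-255).
Image = Sequence[Sequence[int]]


def average_hash(pixels: Image) -> int:
    """Average hash: one bit per pixel, set when the pixel is brighter than the mean."""
    flat = [p for row in pixels for p in row]
    if not flat:
        raise ValueError("image has no pixels")
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two fingerprints."""
    return bin(a ^ b).count("1")


def matches_known(pixels: Image, known_hashes: Iterable[int], max_distance: int = 5) -> bool:
    """True if the image's fingerprint is within max_distance bits of any known fingerprint."""
    h = average_hash(pixels)
    return any(hamming(h, k) <= max_distance for k in known_hashes)


# Usage: store the fingerprint of a "known" image, then check new images against it.
known_img = [[(r * 8 + c) * 4 for c in range(8)] for r in range(8)]
database = {average_hash(known_img)}

brighter = [[p + 3 for p in row] for row in known_img]  # near-copy of the known image
reversed_img = [[(63 - (r * 8 + c)) * 4 for c in range(8)] for r in range(8)]  # different image

print(matches_known(brighter, database))      # True
print(matches_known(reversed_img, database))  # False
```

Because only fingerprints of images already in the database are compared, a new photo is intended to match only when it is a near-copy of a known image, such as the slightly brightened version above, rather than merely showing a similar scene.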

Photos that parents take without any malicious intent, such as half-naked pictures in bathrooms and similar settings, will be treated by the system as “off-target”, so iPhone users do not need to worry.

Some experts, however, are not reassured by these explanations and remain concerned about the privacy of personal information.

Johns Hopkins University security researcher Matthew Green says: “Whatever Apple's long-term plans, they have sent a very clear signal by announcing this system: that it is safe to build systems that scan users' phones for prohibited content.” He adds:

"Whether they are right or wrong about this hardly matters. It means crossing a threshold, and governments will demand it from everyone."

While Apple's move is seen as a big step towards preventing child abuse, these concerns suggest that innocent people could be harmed and that states may demand more data.

Source:

cumhuriyet.com.tr

reuters.com

tr.euronews.com

indyturk.com

apple.com
